Filling In a JSON Template with an LLM

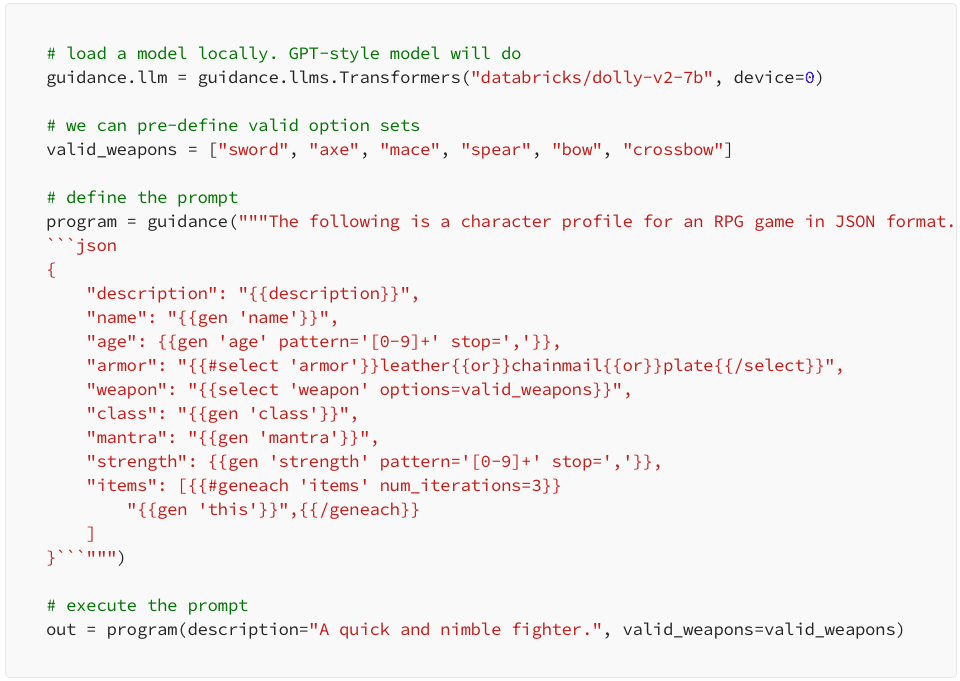

This function wraps a prompt with settings that ensure the LLM response is a valid JSON object, optionally matching a given JSON schema. The function can work with all models and... Understand how to make sure LLM outputs are valid JSON, and valid against a specific JSON schema, and learn how to implement this in practice.

Structured JSON offers an unambiguous way to interact with LLMs, and JSON Schema provides a standardized way to describe and enforce the structure of data passed between these components. However, the process of incorporating variable... In this blog post, I will delve into a range of strategies designed to address this challenge. We will explore several tools and methodologies in depth, each offering unique... Let's take a look through an example main.py. One sample output begins: Reasoning='a balanced strong portfolio suitable for most risk tolerances would allocate around...

Here are a couple of things I have learned. First, show the model a proper JSON template: in this approach you ask the LLM to generate the output in a specific format. Second, define a JSON schema, for example using Zod.

Several tools help with this. Vertex AI now has two new features, response_mime_type and response_schema, that help to restrict the LLM outputs to a certain format. llm_template enables the generation of robust JSON outputs from any instruction model. Super JSON Mode is a Python framework that enables the efficient creation of structured output from an LLM by breaking up a target schema into atomic components and then performing... One of the tools covered offers developers a pipeline to specify complex instructions, responses, and configurations.

On related topics: researchers developed Medusa, a framework to speed up LLM inference by adding extra heads to predict multiple tokens simultaneously. If you work in the healthcare industry, your objective might be training an LLM to comprehend medical terminology, patient records, and confidential data. Despite the popularity of these tools (millions of developers use GitHub Copilot), existing evaluations of...
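The advice to show the model a proper JSON template can be sketched in a few lines of Python. This is a minimal illustration, not the main.py mentioned above: the `TEMPLATE` contents and the helper names `build_prompt` and `extract_json` are hypothetical, and no real model is called.

```python
import json

# Hypothetical template: the keys and placeholder values are illustrative only.
TEMPLATE = {
    "name": "<string>",
    "age": "<integer>",
    "tags": ["<string>"],
}


def build_prompt(instruction: str, template: dict) -> str:
    """Embed a JSON template in the prompt so the model can copy its shape."""
    return (
        f"{instruction}\n\n"
        "Respond with a single JSON object that follows this template exactly. "
        "Do not add commentary before or after the JSON.\n\n"
        f"{json.dumps(template, indent=2)}"
    )


def extract_json(response_text: str) -> dict:
    """Parse the first JSON object found in a model response."""
    start = response_text.find("{")
    if start == -1:
        raise ValueError("no JSON object found in response")
    # raw_decode parses one JSON value and ignores any trailing text,
    # which helps when the model wraps its answer in prose or code fences.
    obj, _end = json.JSONDecoder().raw_decode(response_text[start:])
    return obj
```

A template in the prompt only makes the right shape more likely; it does not guarantee it, so the parsed result should still be checked before use.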

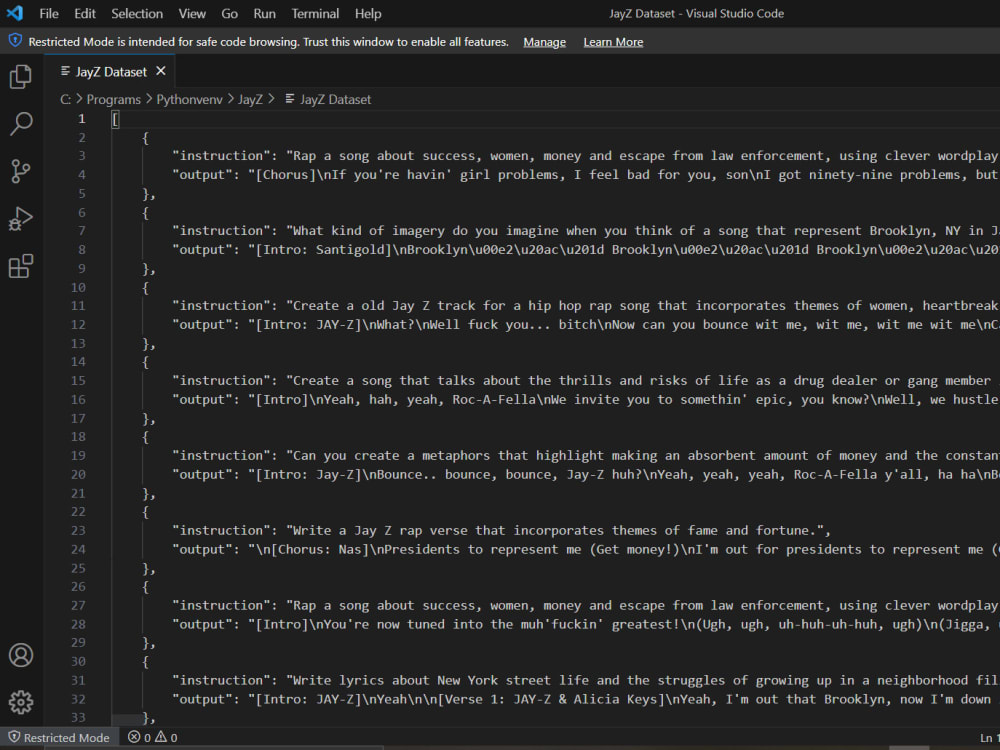

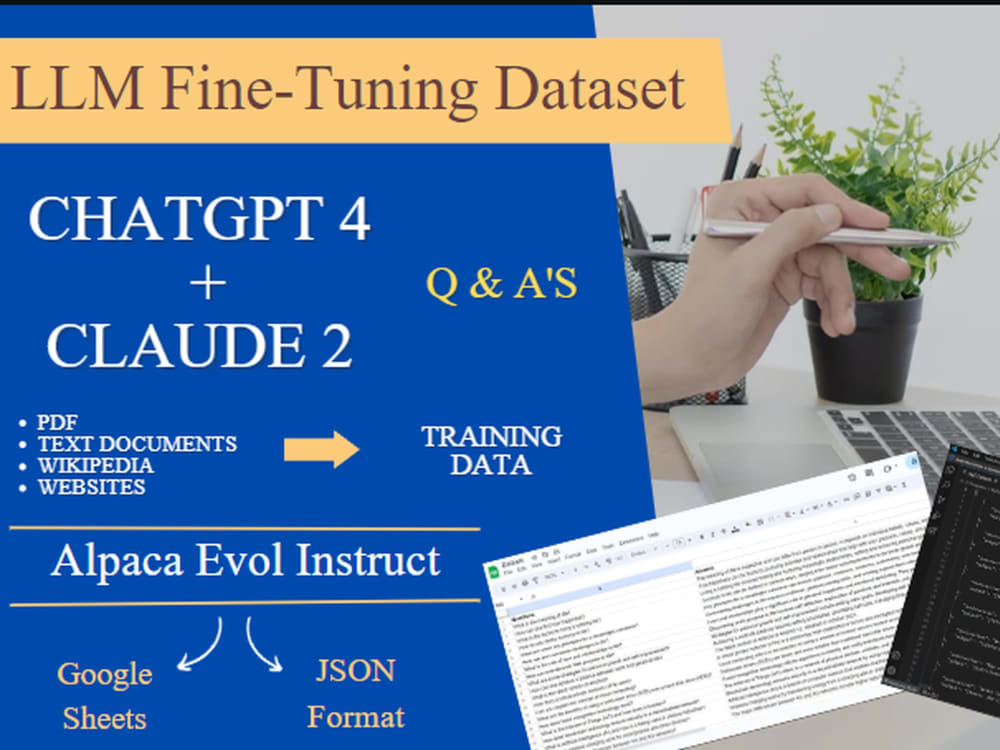

An instruct Dataset in JSON format made from your sources for LLM

chatgpt How to generate structured data like JSON with LLM models

MLC MLCLLM Universal LLM Deployment Engine with ML Compilation

Dataset enrichment using LLM's Xebia

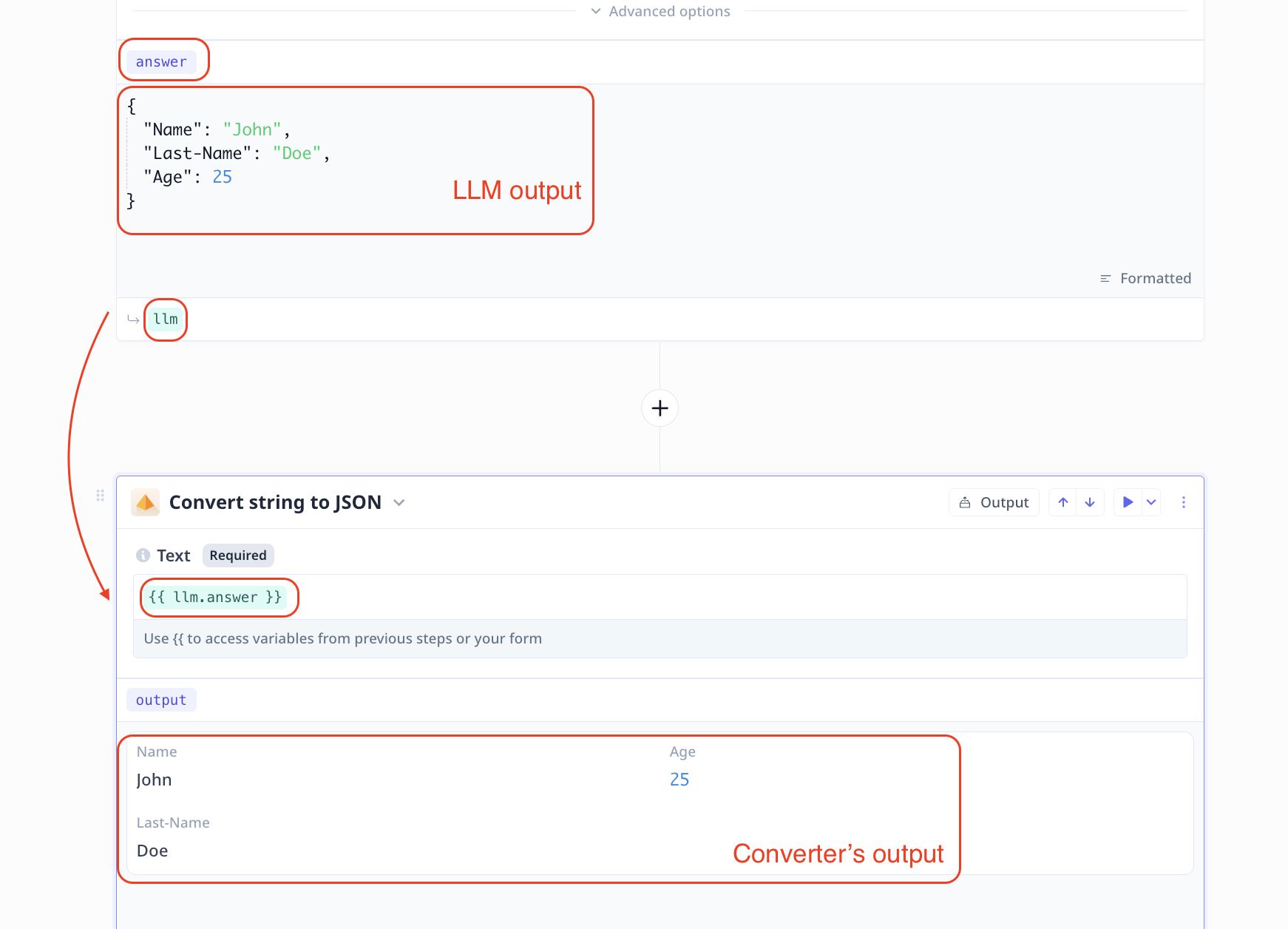

Large Language Model (LLM) output Relevance AI Documentation

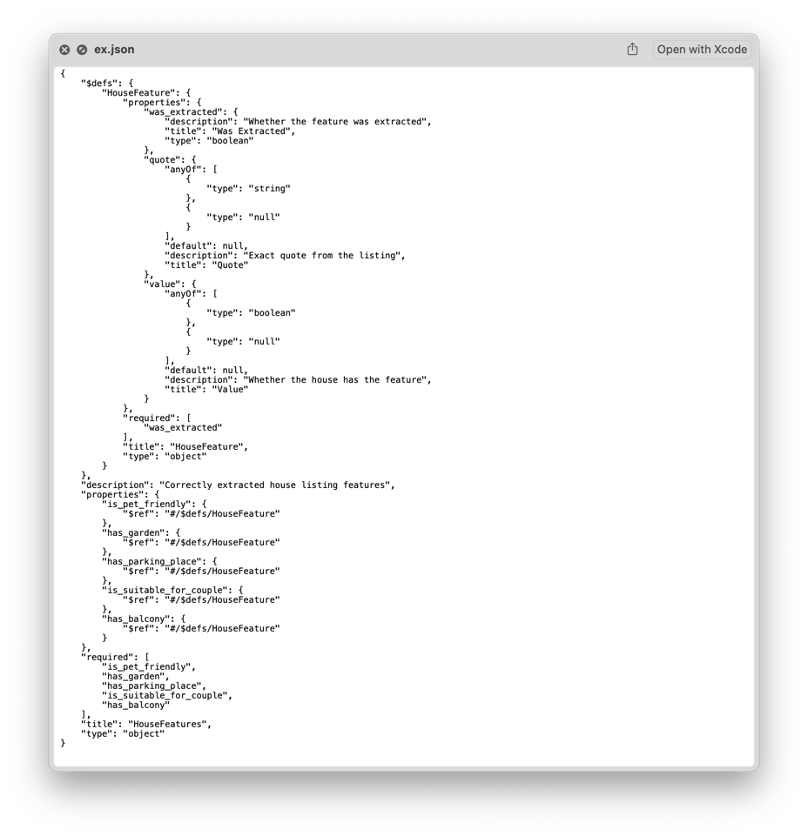

A Sample of Raw LLMGenerated Output in JSON Format Download

Crafting JSON outputs for controlled text generation Faktion

Practical Techniques to constraint LLM output in JSON format by

Super JSON Mode is a Python framework that enables the efficient creation of structured output from an LLM by breaking up a target schema into atomic components and then performing...
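The idea of splitting a target schema into atomic components can be illustrated with a short sketch. This is not Super JSON Mode's actual API, which is not shown here; `fill_schema_by_field` and `fake_model` are hypothetical names, and the sketch assumes a flat schema whose fields map to simple Python types.

```python
from typing import Callable, Dict


def fill_schema_by_field(
    fields: Dict[str, type],
    ask_model: Callable[[str], str],
    context: str,
) -> dict:
    """Fill a flat schema one field at a time, with one short prompt per field."""
    result = {}
    for name, expected_type in fields.items():
        prompt = (
            f"{context}\n\n"
            f"Give only the value for '{name}' ({expected_type.__name__})."
        )
        raw = ask_model(prompt).strip()
        # Coerce each answer to its declared type; a bad answer such as
        # int("abc") raises ValueError instead of passing through silently.
        result[name] = expected_type(raw)
    return result


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call, so the sketch runs offline."""
    return "36" if "'age'" in prompt else "Ada"
```

Because the surrounding code assembles the object itself, the braces, quotes, and key names are always well formed; the model only has to supply each small value.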

Understand how to make sure LLM outputs are valid JSON, and valid against a specific JSON schema.
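The two checks, valid JSON and valid against a schema, can be sketched with the standard library alone. The validator below is an assumption-laden illustration that handles only a small subset of JSON Schema (`type` as a single string, `required`, `properties`, `items`); the function names are hypothetical, and a dedicated library such as `jsonschema` is the better choice for real use.

```python
import json

# Maps JSON Schema type names to Python types.
_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "array": list,
    "object": dict,
    "null": type(None),
}


def validate(value, schema: dict, path: str = "$") -> list:
    """Return a list of error strings; an empty list means the value conforms."""
    errors = []
    expected = schema.get("type")
    if expected is not None:
        # bool needs special handling because isinstance(True, int) is True.
        is_bool = isinstance(value, bool)
        wrong_type = not isinstance(value, _TYPES[expected])
        if wrong_type or (is_bool and expected != "boolean"):
            return [f"{path}: expected {expected}"]
    if isinstance(value, dict):
        for key in schema.get("required", []):
            if key not in value:
                errors.append(f"{path}.{key}: missing required property")
        for key, subschema in schema.get("properties", {}).items():
            if key in value:
                errors.extend(validate(value[key], subschema, f"{path}.{key}"))
    if isinstance(value, list) and "items" in schema:
        for i, item in enumerate(value):
            errors.extend(validate(item, schema["items"], f"{path}[{i}]"))
    return errors


def check_llm_output(text: str, schema: dict) -> list:
    """Check both conditions: the text parses as JSON and matches the schema."""
    try:
        value = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    return validate(value, schema)
```

Returning a list of errors rather than raising on the first one makes it easy to report every problem back to the model in a single follow-up prompt.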

This function wraps a prompt with settings that ensure the LLM response is a valid JSON object, optionally matching a given JSON schema.
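The function described here is not shown, so the following is only a rough sketch of one way such a wrapper could behave. It substitutes a plain retry loop for model-side settings, and a list of required keys for a full schema; `json_prompt`, `call_model`, and `required_keys` are hypothetical names.

```python
import json
from typing import Callable, Optional

SUFFIX = "Reply with one valid JSON object and nothing else."


def json_prompt(
    prompt: str,
    call_model: Callable[[str], str],
    required_keys: Optional[list] = None,
    max_attempts: int = 3,
) -> dict:
    """Call the model until it returns a JSON object with the required keys."""
    required_keys = required_keys or []
    message = f"{prompt}\n\n{SUFFIX}"
    last_error = "no attempts made"
    for _ in range(max_attempts):
        reply = call_model(message)
        try:
            data = json.loads(reply)
        except json.JSONDecodeError as exc:
            last_error = f"invalid JSON: {exc.msg}"
        else:
            if not isinstance(data, dict):
                last_error = "top-level value is not an object"
            else:
                missing = [k for k in required_keys if k not in data]
                if not missing:
                    return data
                last_error = f"missing keys: {missing}"
        # Feed the error back so the next attempt can correct it.
        message = (
            f"{prompt}\n\nYour previous reply was rejected ({last_error}). {SUFFIX}"
        )
    raise ValueError(f"no valid JSON after {max_attempts} attempts: {last_error}")
```

A retry loop works with any model that accepts text, at the cost of extra calls when the first reply is malformed; provider features that constrain the output format directly avoid that cost where they are available.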

Researchers developed Medusa, a framework to speed up LLM inference by adding extra heads to predict multiple tokens simultaneously.
